Efficient batch processing is a cornerstone of data engineering: businesses depend on scheduled jobs that move, clean, and aggregate their data reliably. Apache Airflow is one of the most widely used open-source tools for orchestrating such jobs, valued for its flexibility, scalability, and reliability. This guide looks at what Airflow offers, how to implement batch workflows with it, and where it is applied in real-world batch processing scenarios.

Understanding Apache Airflow: A Robust Framework for Workflow Orchestration

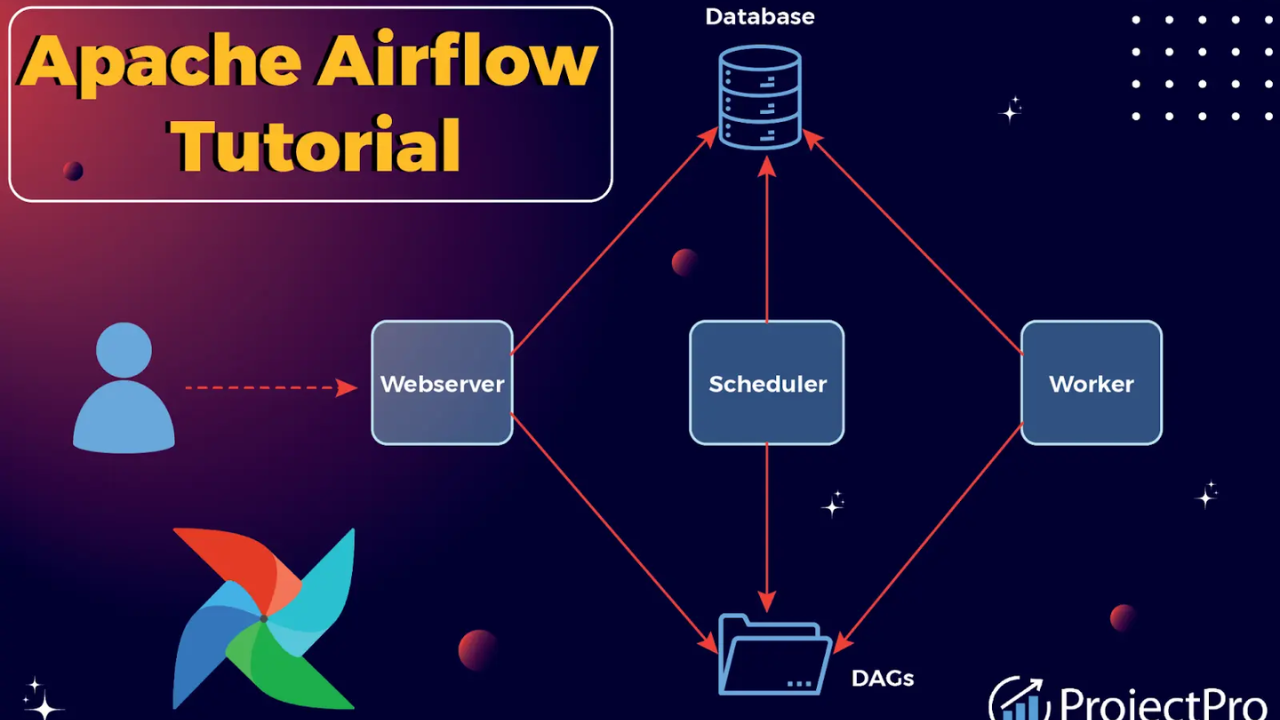

Apache Airflow is a workflow orchestration platform that was created at Airbnb in 2014 and is now a top-level project of the Apache Software Foundation. It provides a single platform for defining, scheduling, and monitoring workflows, which helps organizations manage their data pipelines consistently. At its core, Airflow models each workflow as a Directed Acyclic Graph (DAG) of tasks defined in Python code. Each edge in the graph states that one task must finish before another can start, and the absence of cycles guarantees that there is always a valid order in which to run the tasks.
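The following sketch makes the DAG idea concrete without installing Airflow. It is plain Python built on the standard library's `graphlib` module (Python 3.9+), not Airflow code. The task names are hypothetical. In a real DAG, the same dependencies would be declared between operators, for example `extract >> transform >> load`. The sketch groups tasks into batches whose upstream tasks have all finished. Tasks in the same batch do not depend on each other, so they could run in parallel.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on (its upstreams).
PIPELINE: dict[str, set[str]] = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform": {"extract_orders", "extract_customers"},
    "load": {"transform"},
}


def parallel_batches(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into batches that can run together once earlier batches finish.

    Raises graphlib.CycleError if the graph contains a cycle, i.e. is not a DAG.
    """
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # validates the graph and detects cycles
    batches: list[list[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for a stable, readable order
        batches.append(ready)
        sorter.done(*ready)  # mark the batch finished, unlocking downstream tasks
    return batches


for step, batch in enumerate(parallel_batches(PIPELINE), start=1):
    print(step, batch)

# A cycle (transform needs load, load needs transform) is rejected.
try:
    parallel_batches({"transform": {"load"}, "load": {"transform"}})
except CycleError:
    print("not a DAG: cycle detected")
```

The two extract tasks land in the same batch because neither depends on the other. An Airflow deployment with several workers can exploit exactly that kind of independence.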

Key Features of Apache Airflow

- DAGs (Directed Acyclic Graphs): The cornerstone of Airflow's architecture. DAGs declare tasks and their dependencies in code, and the web UI renders them as graphs so you can inspect both the structure and the status of each run.

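- Dynamic Task Dependency: Airflow supports conditional execution through branching operators and trigger rules, so a run can follow different paths depending on data or context. Since Airflow 2.3, dynamic task mapping can also create a variable number of tasks at runtime.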

- Extensible: Custom operators, hooks, and plugins, along with a large set of provider packages, let Airflow integrate with databases, cloud services, and many other external systems.

- Scalable: Airflow runs anywhere from a single machine for prototypes to distributed production deployments. Executors such as the CeleryExecutor and KubernetesExecutor spread task execution across many workers.

- Fault Tolerance: Tasks can be configured with automatic retries and retry delays. Failed tasks can also be cleared and re-run individually without restarting the whole workflow.
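Conditional execution can be pictured with a small plain-Python model of branching. In Airflow, a `BranchPythonOperator` runs a callable that returns the task ID (or list of task IDs) to follow, and the other directly downstream tasks are marked as skipped. The sketch below imitates only that decision. The task names and the first-of-the-month rule are hypothetical.

```python
from datetime import date

# Hypothetical tasks sitting directly downstream of the branch point.
DOWNSTREAM_TASKS = ("full_reload", "incremental_load")


def choose_branch(run_date: date) -> str:
    """Return the ID of the task to follow (assumed rule: full reload on the 1st)."""
    return "full_reload" if run_date.day == 1 else "incremental_load"


def resolve_states(run_date: date) -> dict[str, str]:
    """Mark the chosen task to run and every other downstream task as skipped."""
    chosen = choose_branch(run_date)
    return {task: "run" if task == chosen else "skipped" for task in DOWNSTREAM_TASKS}


print(resolve_states(date(2024, 3, 1)))
print(resolve_states(date(2024, 3, 15)))
```

Some tasks must run whichever branch is taken, such as a final cleanup step. These are typically given a non-default trigger rule (for example `none_failed`) so that a skipped upstream task does not cause them to be skipped as well.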

Implementing Apache Airflow for Batch Processing

The versatility of Apache Airflow makes it a strong choice for batch processing across many industries. The job might be an ETL (Extract, Transform, Load) pipeline, a data warehousing process, or scheduled report generation. In each case, Airflow coordinates the individual steps, their ordering, and their failure handling.


Step-by-Step Implementation Guide

- Define Workflow: Begin by defining your workflow as a DAG in a Python file, listing the tasks and the dependencies between them.

- Configure Operators: Use Airflow's operators to define the individual tasks in your workflow. Some operators are simple, such as BashOperator, which runs a shell command, and PythonOperator, which calls a Python function. Others are provider-specific operators for databases, cloud services, and data processing engines.

- Set Up Connections and Hooks: Store credentials and endpoints for external systems, such as databases, cloud storage, or APIs, as Airflow connections. Then use hooks to interact with those systems from your tasks.

- Schedule and Monitor: Give each DAG a schedule, for example a cron expression or a preset such as @daily. Then use Airflow's web UI to monitor runs, inspect task logs, and trigger or re-run work when needed.

- Handle Errors and Retries: Configure retries, retry delays, and failure notifications so that transient failures are retried automatically and persistent ones are surfaced quickly.
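Airflow's per-task `retries` and `retry_delay` arguments, with optional `retry_exponential_backoff`, handle transient failures without custom code. The plain-Python sketch below models those semantics so the behaviour is easy to see. A task gets one initial attempt plus up to `retries` more, with a pause between attempts. The doubling used for backoff is a simplification and not Airflow's exact formula, and `flaky_extract` is a hypothetical task.

```python
import time
from datetime import timedelta
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_retries(
    task: Callable[[], T],
    retries: int = 3,
    retry_delay: timedelta = timedelta(seconds=0),
    exponential_backoff: bool = False,
) -> T:
    """Run `task`, retrying up to `retries` times after an exception."""
    attempt = 0
    while True:
        try:
            return task()
        except Exception:
            if attempt >= retries:
                raise  # retries exhausted: surface the failure, like a failed task
            delay = retry_delay.total_seconds()
            if exponential_backoff:
                delay *= 2 ** attempt  # simplified doubling between attempts
            time.sleep(delay)
            attempt += 1


# Hypothetical task that fails twice with a transient error, then succeeds.
calls = {"count": 0}


def flaky_extract() -> str:
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("source temporarily unavailable")
    return "extracted rows"


print(run_with_retries(flaky_extract, retries=3))
```

With `retries=3`, the two transient failures are absorbed and the third attempt succeeds. A task that keeps failing still raises once its retries are used up, so the failure is not hidden.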

Real-World Applications of Apache Airflow in Batch Processing

The versatility and scalability of Apache Airflow make it a popular choice for batch processing across various industries. Let’s explore some real-world applications where Airflow shines:

ETL Pipelines

Apache Airflow simplifies the orchestration of ETL pipelines, enabling organizations to extract data from multiple sources, transform it according to their business logic, and load it into their data warehouse or analytics platform.
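The three ETL stages map naturally onto three tasks. The plain-Python sketch below keeps everything in memory so it runs on its own. The source rows, the rule for dropping negative amounts, and the `warehouse` dictionary are hypothetical stand-ins for real systems that would be reached through hooks. In an Airflow DAG, each function would typically become its own task, for example with the TaskFlow API's `@task` decorator. The load step overwrites the partition for its run date, which keeps re-runs and retries idempotent. Idempotency is a widely recommended practice for Airflow tasks.

```python
from datetime import date

# Hypothetical source rows; in a real pipeline these would come from a database or API.
ORDERS = [
    {"order_id": 1, "amount": "19.99", "country": "us"},
    {"order_id": 2, "amount": "5.00", "country": "DE"},
    {"order_id": 3, "amount": "-1", "country": "fr"},  # invalid row
]


def extract() -> list[dict]:
    """Copy the raw rows so later steps never mutate the source."""
    return [dict(row) for row in ORDERS]


def transform(rows: list[dict]) -> list[dict]:
    """Apply business logic: parse amounts, drop negatives, normalise country codes."""
    cleaned = []
    for row in rows:
        amount = float(row["amount"])
        if amount < 0:
            continue  # assumed business rule: negative amounts are invalid
        cleaned.append(
            {"order_id": row["order_id"], "amount": amount, "country": row["country"].upper()}
        )
    return cleaned


def load(rows: list[dict], warehouse: dict, run_date: date) -> None:
    """Overwrite this run date's partition so re-running the load is idempotent."""
    warehouse[run_date] = {row["order_id"]: row for row in rows}


warehouse: dict = {}
run_date = date(2024, 1, 1)
load(transform(extract()), warehouse, run_date)
load(transform(extract()), warehouse, run_date)  # a retry or re-run does not duplicate data
print(warehouse[run_date])
```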


Data Warehousing

For organizations managing large volumes of data, Airflow provides a reliable framework for automating data warehousing processes, including data ingestion, transformation, and aggregation. Downstream analysis and decision-making can then rely on consistently refreshed data.
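Incremental ingestion is a common pattern in warehouse loads: each scheduled run processes only the data for its own time window. Airflow exposes that window to tasks as `data_interval_start` and `data_interval_end`. The plain-Python sketch below shows the idea with hypothetical event rows. It selects a half-open interval and aggregates it into a daily summary record.

```python
from datetime import datetime, timedelta

# Hypothetical source events with timestamps.
EVENTS = [
    {"id": 1, "ts": datetime(2024, 1, 1, 23, 30), "value": 10},
    {"id": 2, "ts": datetime(2024, 1, 2, 0, 0), "value": 5},
    {"id": 3, "ts": datetime(2024, 1, 2, 12, 15), "value": 7},
    {"id": 4, "ts": datetime(2024, 1, 3, 0, 0), "value": 1},
]


def rows_in_interval(rows: list[dict], start: datetime, end: datetime) -> list[dict]:
    """Select rows in the half-open window [start, end) so adjacent runs never overlap."""
    return [row for row in rows if start <= row["ts"] < end]


def daily_summary(rows: list[dict], start: datetime, end: datetime) -> dict:
    """Aggregate one interval's rows into a single summary record."""
    batch = rows_in_interval(rows, start, end)
    return {
        "interval_start": start,
        "row_count": len(batch),
        "total_value": sum(row["value"] for row in batch),
    }


interval_start = datetime(2024, 1, 2)
summary = daily_summary(EVENTS, interval_start, interval_start + timedelta(days=1))
print(summary)
```

Because the window is half-open, the event at exactly midnight on 2 January belongs to the 2 January run only and is never counted twice.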

Report Generation

With Airflow, organizations can automate the generation and distribution of reports, dashboards, and insights, streamlining the reporting process and ensuring timely delivery of critical business information.
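Report generation often comes down to rendering a file for each run and handing it to a delivery step. The sketch below renders a CSV report with the standard `csv` module. It names the file after the run's logical date, similar to how an Airflow task might use the `{{ ds }}` template variable (the run date as `YYYY-MM-DD`). The report name and columns are hypothetical. Delivery, for example through Airflow's `EmailOperator`, is left out.

```python
import csv
import io
from datetime import date


def render_report(rows: list[dict], columns: list[str], run_date: date) -> tuple[str, str]:
    """Return (filename, CSV text) for one run's report."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=columns, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    # isoformat() gives YYYY-MM-DD, the same shape as Airflow's `ds` value.
    filename = f"sales_report_{run_date.isoformat()}.csv"
    return filename, buffer.getvalue()


name, content = render_report(
    [{"country": "DE", "revenue": 5.0}, {"country": "US", "revenue": 19.99}],
    columns=["country", "revenue"],
    run_date=date(2024, 1, 2),
)
print(name)
print(content)
```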

Conclusion

In the era of big data, efficient batch processing is essential for organizations seeking to derive insights from their data. Apache Airflow offers a robust framework for orchestrating complex batch workflows. It combines code-defined DAGs, a broad integration ecosystem, and built-in scheduling, retry, and monitoring features. Used well, it helps businesses turn their data into reliable, timely input for growth and decision-making.